A brief overview:

Evidence-based practice (EBP) is the integration of the best available evidence with clinical expertise and with patients' values and circumstances to inform clinical management and health policy decisions.

Incorporating EBP into clinical settings is crucial for supporting healthcare professionals in making informed clinical decisions.

However, the adoption of EBP is frequently impeded by a lack of support and by practical barriers such as limited time and a shortage of critical appraisal expertise. These barriers prevent clinicians from routinely drawing on up-to-date, high-quality evidence when making decisions.

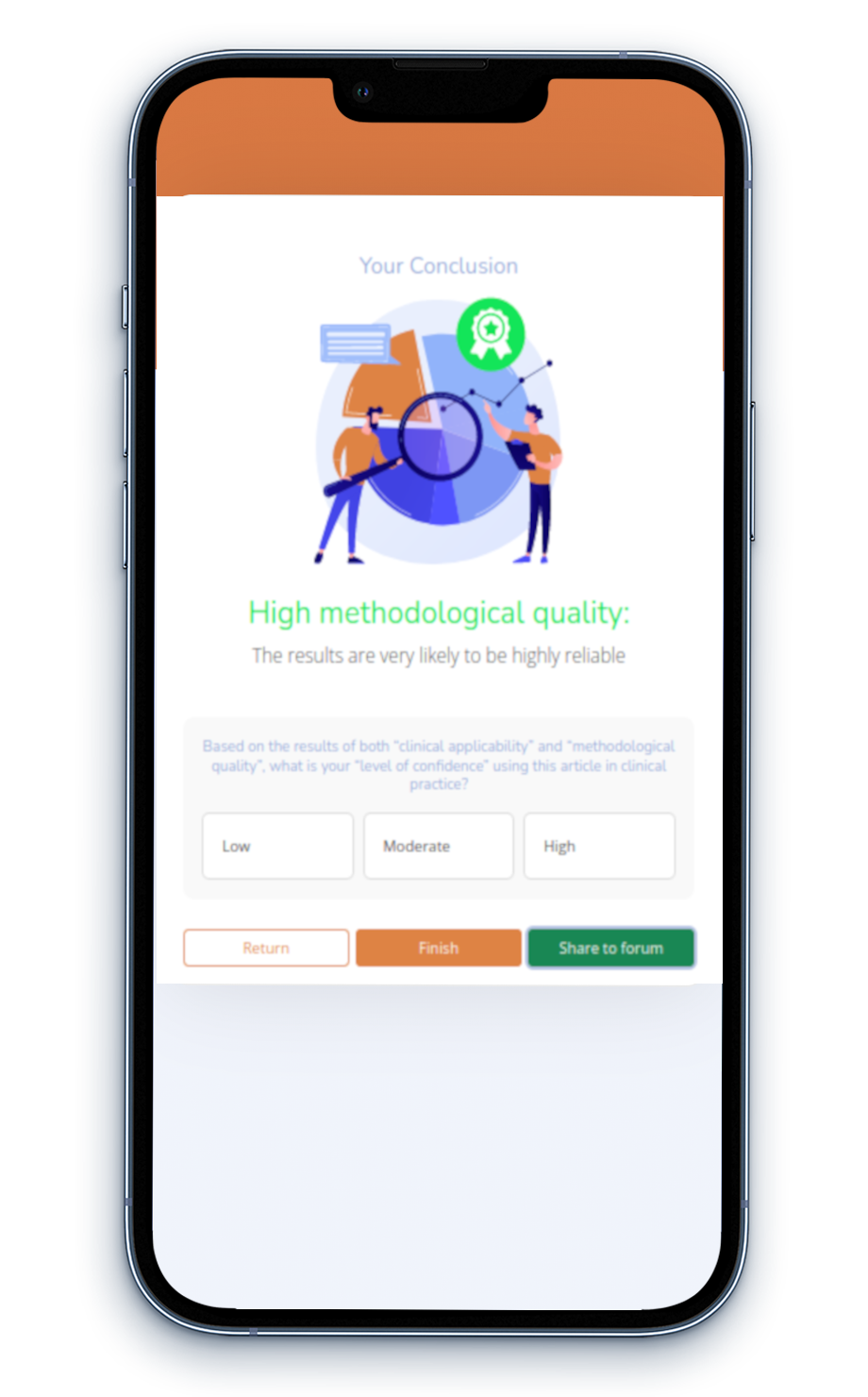

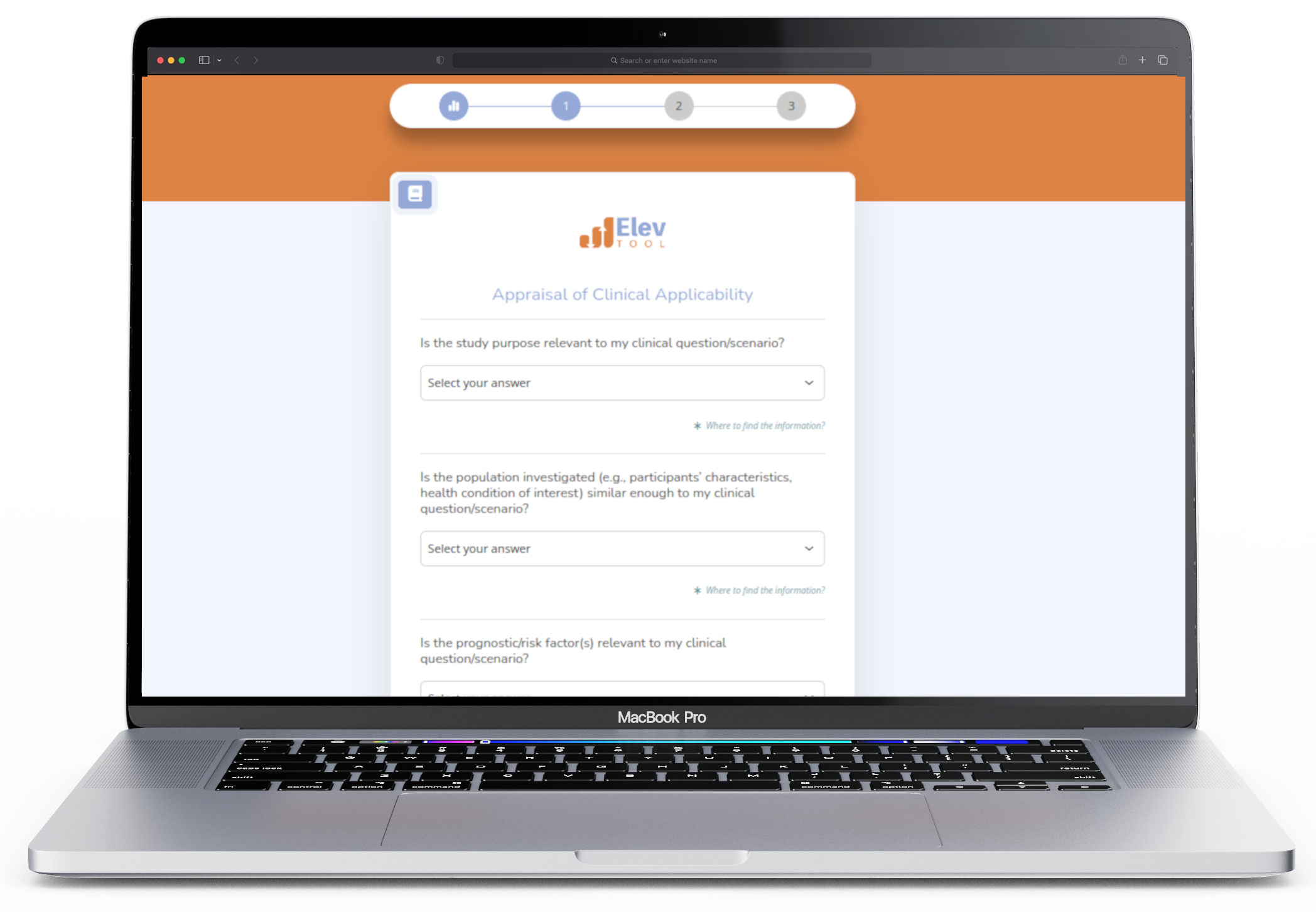

Numerous respected authors have created valid and reliable instruments to help users critically appraise scholarly literature. However, these tools often use language tailored to researchers. Our tool addresses this by making subtle modifications that improve readability and ease of interpretation. It also simplifies selected aspects of the original tools to reduce the time needed to complete them. Out of respect for the original authors' work, and for those who want more in-depth information, we provide links to the original tools.